All information might have changed since this content was released.

Some descriptions may be inaccurate, since this was written as part of a learning process.

If any information is out of date or wrong, leave a comment! It's free and open source! “Let’s work together, the result will be better!”

Overview of the project

The purpose of this project is to provide connectivity and management for several screens across a room (a meeting room, for instance).

It is based on SOO (see the SO3 Operating System topic and the SOO topic), which already has very lightweight graphics support.

The main goal of the digital room is to transfer images and video content to each SOO instance in the same environment, with a manager that controls all of this content.

The operating system already runs on the Raspberry Pi, and the Rpi is low cost, has the right technical features (HDMI outputs, Wi-Fi, 4 cores, a GPU, …) and has plenty of open source resources available. That made it the logical choice for this project.

Quick summary of the project’s topology

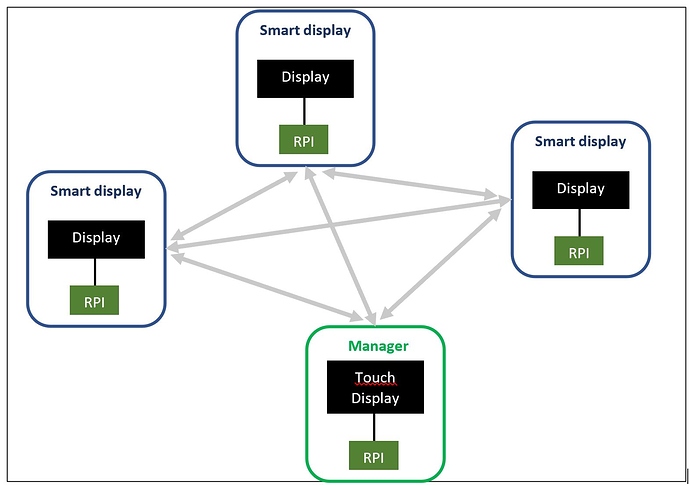

SOO use a mesh network topology, providing a direct communication to each device from on of them. here is how the topoplogy look likes :

As the image shows, each screen is connected over HDMI to a Rpi (which runs a SOO instance). It is important to identify two different roles inside this network:

- Manager: only one is necessary. It is the top-level device. Its role is to manage what the screens display: it oversees every screen by viewing, controlling and managing the stream of each one.

- Smart display: the smart display is the mid-level / low-level device. Its role is to display content according to the instructions provided by the manager. It also independently manages the content shared by all the clients around it.
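The two roles can be captured in a small data model. This is a minimal sketch under assumptions. `Role`, `Device`, `check_topology` and `peers` are hypothetical names, and "only one is necessary" is read as exactly one manager per room. Because the network is a mesh, every other device is treated as a direct peer.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Role(Enum):
    MANAGER = "manager"              # top-level device: controls every screen
    SMART_DISPLAY = "smart_display"  # mid/low-level device: shows content


@dataclass(frozen=True)
class Device:
    name: str  # hypothetical identifier, e.g. a hostname
    role: Role


def check_topology(devices: List[Device]) -> Device:
    """Return the room's manager; raise if there is not exactly one."""
    managers = [d for d in devices if d.role is Role.MANAGER]
    if len(managers) != 1:
        raise ValueError(f"expected exactly one manager, found {len(managers)}")
    return managers[0]


def peers(devices: List[Device], me: Device) -> List[Device]:
    """In a full mesh, every other device is a direct neighbour."""
    return [d for d in devices if d != me]
```

For example, a room with one manager and two screens passes `check_topology`, and each screen sees the manager and the other screen as direct peers.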

This project clearly needs good video performance in order to stream. So, before the guidelines, let's gather some information about the Rpi's video performance.

Rpi information

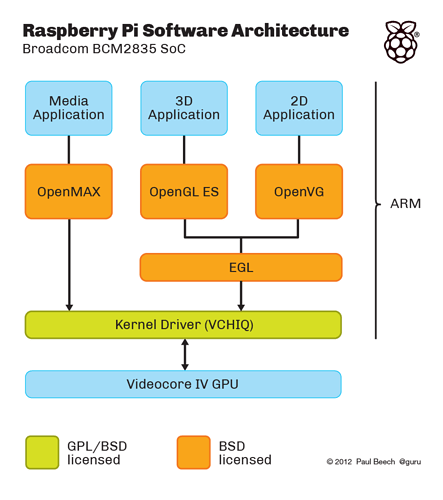

The Rpi SoC has a GPU (the VideoCore). It provides a dedicated interface and hardware-accelerated software.

As this article explains, the best video player on a Rpi is OMXplayer. It is a hardware-accelerated command-line video player that keeps the CPU load below 5%, and it is based on OpenMAX.

OpenMAX is the most efficient way to get hardware-accelerated software using the GPU:

As the image shows, using OpenMAX removes most of the intermediate layers between the application and the kernel driver for the VideoCore (VC).

When Raspberry Pi OS runs a desktop environment on screen, it uses an X server with glamor, which relies on the OpenGL layer.

So when you call omxplayer, it seems to override the displayed content by using the parallel OpenMAX “channel”.
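To show how a video could be placed on top of the desktop, the helper below builds an omxplayer command line without running it. It is a sketch. The function name and its defaults are hypothetical. The options themselves are existing omxplayer options: `-o hdmi` (audio on HDMI), `-b` (black background), `--layer` (render layer, higher numbers on top), `--loop` and `--win`. Note that omxplayer has since been deprecated in recent Raspberry Pi OS releases, so this applies to older images.

```python
from typing import List, Optional, Tuple


def omxplayer_command(source: str, layer: int = 10, loop: bool = False,
                      window: Optional[Tuple[int, int, int, int]] = None) -> List[str]:
    """Build (but do not run) an omxplayer command line.

    source: file path or stream URL to play.
    layer:  render layer; higher numbers are drawn on top, so the video
            covers the desktop/GUI rendered underneath.
    window: optional (x1, y1, x2, y2) rectangle instead of full screen.
    """
    # -o hdmi: audio to HDMI; -b: black background behind the video.
    cmd = ["omxplayer", "-o", "hdmi", "-b", "--layer", str(layer)]
    if loop:
        cmd.append("--loop")
    if window is not None:
        # --win takes the four coordinates as a single argument.
        cmd += ["--win", " ".join(str(v) for v in window)]
    cmd.append(source)
    return cmd
```

On the Pi it could be run with `subprocess.run(omxplayer_command("/home/pi/demo.mp4", loop=True))`. The path is only an example.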

OpenMAX is also programmable! (some books here)

In addition, ffmpeg is a high-performance, cross-platform tool for streaming and other video applications, and it is compatible with the Rpi.

These are two tools that could be integrated into the project and could lead to an achievable solution.
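On the ffmpeg side, one smart display could send its content to another as an MPEG-TS stream over UDP. The helper below is a hypothetical sketch that only builds the command. It uses standard ffmpeg options: `-re` (read at the native frame rate), `-stream_loop -1` (loop the input), `-c copy` (no re-encoding, so little CPU load) and `-f mpegts`. The host and port are placeholders. Stream copy assumes the source file is already in codecs that MPEG-TS can carry (for example H.264 + AAC).

```python
from typing import List


def ffmpeg_stream_command(source: str, host: str, port: int,
                          loop: bool = False) -> List[str]:
    """Build (but do not run) an ffmpeg command streaming `source` over UDP."""
    if not 0 < port < 65536:
        raise ValueError("port must be between 1 and 65535")
    cmd = ["ffmpeg", "-re"]              # read input at its native frame rate
    if loop:
        cmd += ["-stream_loop", "-1"]    # input option: must come before -i
    cmd += ["-i", source,
            "-c", "copy",                # no re-encoding: container change only
            "-f", "mpegts",              # MPEG transport stream, suited to UDP
            f"udp://{host}:{port}"]
    return cmd
```

Copying the streams rather than re-encoding keeps the sending Pi's CPU mostly idle. Re-encoding would require a hardware encoder, and its availability depends on how ffmpeg was built.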

Development guidelines

Assuming the topology described above, here are some guidelines for building the project and sharing video.

Screen control

The initial idea is to give the manager the power to control the screens through different statuses:

- Neutral: each smart display shows its own content independently, according to its connected client(s).

- Slave: each smart display shows the content of the smart display set as master.

- Master: the content of this smart display is shown on every other smart display.

- (Binomial master: the content of this smart display is shown on the smart display set as its binomial slave.)

- Binomial slave: this smart display shows the content provided by the smart display set as its binomial master.
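The statuses above can be modelled as a small resolution step on the manager side: given the status chosen for each display, work out whose content every display should show. This is a sketch under assumptions. The `Status` names, the `resolve_sources` function and the `pairs` table that links each binomial slave to its binomial master are hypothetical. Each status is treated as set per display, so a display left Neutral keeps its own content even while another display is Master.

```python
from enum import Enum
from typing import Dict, Optional


class Status(Enum):
    NEUTRAL = "neutral"
    MASTER = "master"
    SLAVE = "slave"
    BINOMIAL_MASTER = "binomial_master"
    BINOMIAL_SLAVE = "binomial_slave"


def resolve_sources(statuses: Dict[str, Status],
                    pairs: Dict[str, str]) -> Dict[str, str]:
    """Map each display name to the display whose content it shows.

    statuses: status chosen by the manager for each display.
    pairs:    hypothetical table binomial slave -> its binomial master.
    """
    masters = [d for d, s in statuses.items() if s is Status.MASTER]
    if len(masters) > 1:
        raise ValueError("at most one master is allowed")
    master: Optional[str] = masters[0] if masters else None

    sources: Dict[str, str] = {}
    for display, status in statuses.items():
        if status is Status.SLAVE:
            if master is None:
                raise ValueError(f"{display} is a slave but no master is set")
            sources[display] = master
        elif status is Status.BINOMIAL_SLAVE:
            partner = pairs.get(display)
            if partner is None or statuses.get(partner) is not Status.BINOMIAL_MASTER:
                raise ValueError(f"{display} has no binomial master")
            sources[display] = partner
        else:
            # Neutral, master and binomial master all show their own content.
            sources[display] = display
    return sources
```

Validating the configuration on the manager before anything is sent keeps impossible states (a slave without a master, two masters) from reaching the displays.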

As seen before, OpenMAX could provide a hardware-accelerated video player, and that player seems to override the content displayed on screen. This could give the manager an implicit way to control the different statuses.

ffmpeg could handle the encoding (video and audio) needed to record and stream the content.
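Following that idea, a smart display could apply a status change by starting or stopping a player drawn above its own GUI. The sketch below is hypothetical: `OverlayPlayer`, `show` and `stop` are illustrative names. It assumes the display already knows the stream URL of the display it must mirror. The launcher is injectable so the logic can be tried without a Pi. Only omxplayer's `-b` and `--layer` options are used, and whether a given stream URL is accepted should be checked on the device.

```python
import subprocess
from typing import Any, Callable, List, Optional


class OverlayPlayer:
    """Start/stop a player drawn above the local GUI (hypothetical helper)."""

    def __init__(self, launcher: Callable[[List[str]], Any] = subprocess.Popen):
        self._launch = launcher          # injectable for testing off-device
        self._proc: Any = None
        self.current_url: Optional[str] = None

    def show(self, url: str) -> None:
        """Mirror another display's stream (Slave / Binomial slave)."""
        if self._proc is not None and url == self.current_url:
            return                       # already showing this stream
        self.stop()
        # -b: black background; --layer 10: drawn above the desktop/GUI.
        self._proc = self._launch(["omxplayer", "-b", "--layer", "10", url])
        self.current_url = url

    def stop(self) -> None:
        """Back to the local GUI (Neutral / Master / Binomial master)."""
        if self._proc is not None:
            # omxplayer is often a wrapper script; check on the device that
            # terminating it really stops the underlying player process.
            self._proc.terminate()
            self._proc.wait()
        self._proc = None
        self.current_url = None
```

In this model, Slave and Binomial slave map to `show(url_of_source)`. Neutral, Master and Binomial master map to `stop()`, which leaves the display's own GUI visible.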

About the manager touch screen / application layer

The manager is a screen that sees the other screens, so it has a grid that shows the live activity of each of them. There is no need to congest the network with a video stream in every screen's canvas. Periodic screen captures, shown for instance with the fbi framebuffer image viewer, could be adequate.
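The manager's grid can be laid out by splitting the canvas into roughly square rows and columns, one cell per smart display, with each cell showing the latest capture of that screen. This is a sketch. `grid_cells` is a hypothetical helper, and the 800×480 size used below is only an assumption about the manager's touch screen.

```python
import math
from typing import List, Tuple

Rect = Tuple[int, int, int, int]  # (x, y, width, height) in pixels


def grid_cells(n_screens: int, width: int, height: int, gap: int = 8) -> List[Rect]:
    """Split a width x height canvas into one thumbnail cell per smart display."""
    if n_screens <= 0:
        return []
    cols = math.ceil(math.sqrt(n_screens))   # as square as possible
    rows = math.ceil(n_screens / cols)
    cell_w = (width - gap * (cols + 1)) // cols
    cell_h = (height - gap * (rows + 1)) // rows
    cells = []
    for i in range(n_screens):
        r, c = divmod(i, cols)               # fill row by row
        cells.append((gap + c * (cell_w + gap), gap + r * (cell_h + gap),
                      cell_w, cell_h))
    return cells
```

For example, `grid_cells(4, 800, 480)` gives a 2×2 grid: `[(8, 8, 388, 228), (404, 8, 388, 228), (8, 244, 388, 228), (404, 244, 388, 228)]`. Each capture would then be scaled to its cell.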

The graphical layer uses LittlevGL. LittlevGL doesn't support video content, but it has a usable canvas that might be driven behind the scenes (with OpenMAX?) to show video inside it.

Client access

More interaction is better, so here are some guidelines to support client activity around a smart display.

A web server like lighttpd could provide access to the device from a web page. It could use CGI scripts to share documents (upload and download) and display them on the screen with a web browser (or directly inside the LittlevGL canvas, if possible).
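As an illustration of the CGI approach, here is a minimal download-listing script. It is a sketch under assumptions. The `SHARE_DIR` location and the `/share/` URL prefix are hypothetical, and uploads (multipart parsing) are left out. lighttpd would also need `mod_cgi` enabled, with something like `cgi.assign = ( ".py" => "/usr/bin/python3" )`.

```python
#!/usr/bin/env python3
"""Minimal CGI sketch: list the documents shared on a smart display."""
import html
import os
import sys
from urllib.parse import quote

# Hypothetical directory where uploaded documents are stored.
SHARE_DIR = os.environ.get("SHARE_DIR", "/var/www/share")


def render_listing(names, base_url="/share/"):
    """Return an HTML page with one download link per file name."""
    items = "\n".join(
        f'<li><a href="{base_url}{quote(n)}">{html.escape(n)}</a></li>'
        for n in sorted(names)
    )
    return f"<html><body><h1>Shared documents</h1>\n<ul>\n{items}\n</ul></body></html>"


def main():
    try:
        names = [n for n in os.listdir(SHARE_DIR)
                 if os.path.isfile(os.path.join(SHARE_DIR, n))]
    except OSError:
        names = []  # missing or unreadable directory: empty listing
    # CGI response: headers, a blank line, then the body on stdout.
    sys.stdout.write("Content-Type: text/html; charset=utf-8\r\n\r\n")
    sys.stdout.write(render_listing(names))


if __name__ == "__main__":
    main()
```

File names are URL-quoted in links and HTML-escaped in text, so an uploaded file with an unusual name cannot inject markup into the page.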

The main advantage of using a web browser is that it gives a flexible way to build apps for collaborative work, or to make this project work with other projects (such as bee screens / pimp my wall).

Another evolution would be to use some Pi Zeros to emulate USB storage instead of using a web page connected to the network.

Note: this is not entirely clear yet, but this overview/guideline requires each device to be connected to the Wi-Fi (directly or through a dedicated SOO device).